I want to show how you can reduce your Amazon S3 costs if you host static content on Amazon Web Services (AWS) S3.

One of the nice features of S3 is bucket versioning. For a blog site, this is a great safety net: if you accidentally commit a change that causes major issues on your site, you can restore the previous version. However, there is a drawback. Every time you overwrite an object, S3 keeps the old copy as a noncurrent version, and you pay to store it. If your site has many large files, that can add up quickly, so how can you ensure you keep old versions only as long as you need them?
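To get a feel for how quickly versions pile up, here is a rough back-of-the-envelope sketch. It assumes, purely for illustration, a single file overwritten a fixed number of times per day, and it ignores the fact that S3 rounds expiration times up to the next midnight UTC, so treat the results as estimates rather than billing figures. The function names are hypothetical.

```python
from typing import Optional


def stored_versions(publishes_per_day: int, days: int,
                    noncurrent_days: Optional[int] = None) -> int:
    """Roughly estimate how many versions of one object S3 keeps.

    Every publish (overwrite) creates a new version. Without a lifecycle
    rule, every version is kept. With a rule that expires noncurrent
    versions after `noncurrent_days`, roughly only the current version plus
    the versions superseded within that window remain.
    """
    total = publishes_per_day * days
    if noncurrent_days is None:
        return total
    return min(total, 1 + publishes_per_day * noncurrent_days)


def stored_megabytes(file_mb: float, publishes_per_day: int, days: int,
                     noncurrent_days: Optional[int] = None) -> float:
    """Approximate storage for that object: file size times versions kept."""
    return file_mb * stored_versions(publishes_per_day, days, noncurrent_days)


if __name__ == "__main__":
    # Hypothetical site: a 5 MB file republished once a day for a year.
    print(stored_megabytes(5, 1, 365))      # no lifecycle rule
    print(stored_megabytes(5, 1, 365, 30))  # expire noncurrent versions after 30 days
```

With these made-up numbers, the first call returns 1825 (MB) and the second 155, which is the kind of gap a lifecycle rule is meant to close.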

S3 Lifecycle rules

In the S3 console for your bucket, you can create a lifecycle rule that expires old (noncurrent) versions of an object after a set number of days.

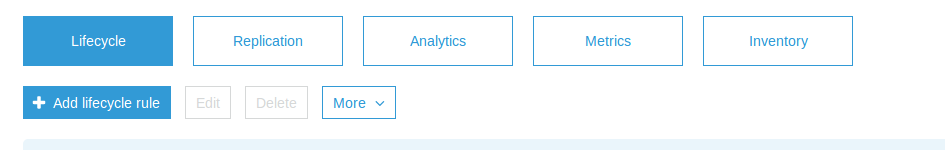

First, select your bucket in the S3 console, then choose the “Management” tab.

Next, select the “Lifecycle” button, then press “+ Add lifecycle rule” below it.

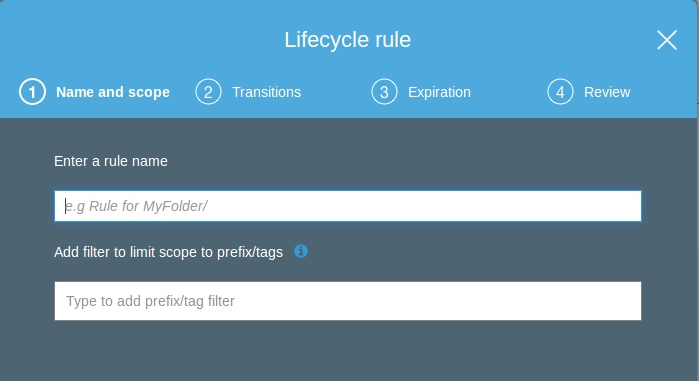

Enter a name for the rule. You can leave the prefix option blank if you want the rule to apply to the entire bucket. Then press “Next”.
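Behind the console, the rule name and prefix become the rule's `ID` and `Filter` in the bucket's lifecycle configuration. The sketch below is an illustration, not AWS code: `make_rule_scope` and `rule_covers` are hypothetical helpers that mimic how a prefix filter selects object keys. An empty prefix matches every key, which is why leaving it blank covers the whole bucket. The 255-character cap on rule IDs comes from the S3 API.

```python
from typing import Any, Dict


def make_rule_scope(rule_name: str, prefix: str = "") -> Dict[str, Any]:
    """Hypothetical helper: map the console's rule name and prefix to ID and Filter."""
    # The console asks for a name; the S3 API caps rule IDs at 255 characters.
    if not rule_name or len(rule_name) > 255:
        raise ValueError("rule name must be 1-255 characters")
    # An empty prefix matches every key, so the rule covers the whole bucket.
    return {"ID": rule_name, "Filter": {"Prefix": prefix}}


def rule_covers(scope: Dict[str, Any], key: str) -> bool:
    """Mimic prefix matching: a key is in scope if it starts with the prefix."""
    return key.startswith(scope["Filter"]["Prefix"])


whole_bucket = make_rule_scope("expire-old-versions")
images_only = make_rule_scope("expire-old-images", prefix="images/")
print(rule_covers(whole_bucket, "index.html"))      # True
print(rule_covers(images_only, "index.html"))       # False
print(rule_covers(images_only, "images/logo.png"))  # True
```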

We can skip the second screen, “Transitions”, because we don’t want to move the old versions to S3 Standard-Infrequent Access or Glacier; we want to delete them. Press “Next” again.

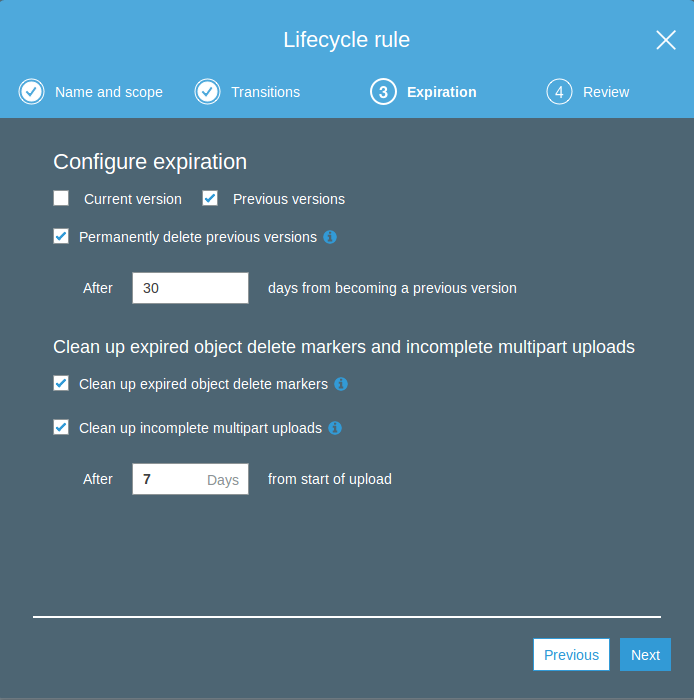

In my example, we remove all old versions 30 days after they become noncurrent (that is, 30 days after a newer version replaces them), and we select the permanently delete option. We also enable the clean-up options in the lower part of the screen, which remove expired object delete markers and incomplete multipart uploads.
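If you would rather keep the rule in version control than click through the console, the same settings can be written as a lifecycle configuration document. This is a minimal sketch using only Python's standard library; the rule ID and the 7-day window for incomplete multipart uploads are assumed values for illustration, not taken from the screenshots.

```python
import json


def build_lifecycle_config(rule_id: str = "expire-old-versions",
                           noncurrent_days: int = 30,
                           multipart_days: int = 7) -> dict:
    """Build a lifecycle configuration resembling the console settings.

    - NoncurrentVersionExpiration: permanently delete old versions
      `noncurrent_days` after a newer version replaces them.
    - ExpiredObjectDeleteMarker: clean up delete markers that no longer
      have any versions behind them.
    - AbortIncompleteMultipartUpload: clean up unfinished uploads
      (the 7-day default here is an assumption).
    """
    return {
        "Rules": [
            {
                "ID": rule_id,
                "Filter": {"Prefix": ""},  # empty prefix = whole bucket
                "Status": "Enabled",
                "NoncurrentVersionExpiration": {"NoncurrentDays": noncurrent_days},
                "Expiration": {"ExpiredObjectDeleteMarker": True},
                "AbortIncompleteMultipartUpload": {"DaysAfterInitiation": multipart_days},
            }
        ]
    }


if __name__ == "__main__":
    # Print the JSON so it can be redirected into a file.
    print(json.dumps(build_lifecycle_config(), indent=2))
```

You could save the output to a file such as `lifecycle.json` and apply it with `aws s3api put-bucket-lifecycle-configuration --bucket your-bucket-name --lifecycle-configuration file://lifecycle.json`, where the bucket name is a placeholder. Be aware that this call replaces any lifecycle rules already on the bucket.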

That’s it: select “Next”, review the settings, and then save the rule.
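Once the rule is saved, you can confirm it is in place by fetching the bucket's configuration, for example with `aws s3api get-bucket-lifecycle-configuration --bucket your-bucket-name` (the bucket name is a placeholder). The helper below is a hypothetical sketch that parses that JSON output and reports the noncurrent-version expiration window of each enabled rule; the `sample` string stands in for real CLI output.

```python
import json
from typing import Dict


def noncurrent_expiry_days(lifecycle_json: str) -> Dict[str, int]:
    """Return {rule ID: NoncurrentDays} for enabled rules that expire old versions."""
    config = json.loads(lifecycle_json)
    result: Dict[str, int] = {}
    for rule in config.get("Rules", []):
        expiry = rule.get("NoncurrentVersionExpiration")
        # Only report rules that are switched on and set a day count.
        if rule.get("Status") == "Enabled" and expiry and "NoncurrentDays" in expiry:
            result[rule.get("ID", "<no id>")] = expiry["NoncurrentDays"]
    return result


sample = """{
  "Rules": [
    {
      "ID": "expire-old-versions",
      "Filter": {"Prefix": ""},
      "Status": "Enabled",
      "NoncurrentVersionExpiration": {"NoncurrentDays": 30}
    }
  ]
}"""
print(noncurrent_expiry_days(sample))  # {'expire-old-versions': 30}
```

An empty result would suggest the rule is disabled or was not saved.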